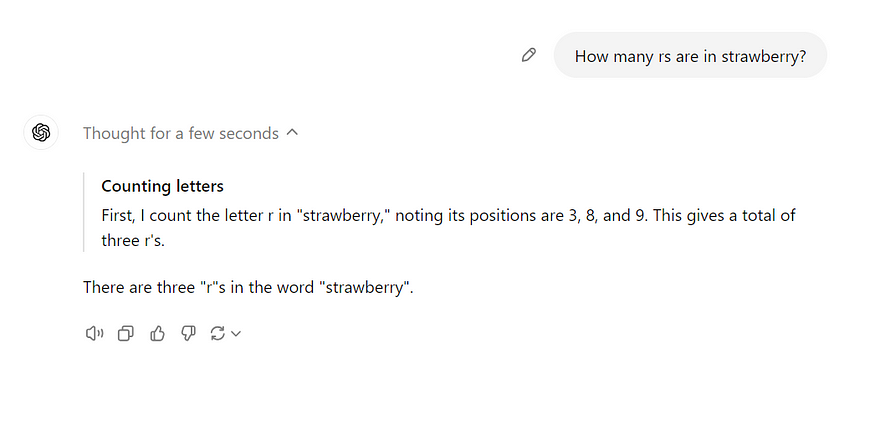

ChatGPT o1-preview (Strawberry) succeeds at Monty Hall — and counts Alice’s siblings

A couple of months ago, researchers pointed out an extremely simple logic puzzle that nearly every AI model failed to solve: “Alice has N brothers and she also has M sisters. How many sisters does Alice’s brother have?”

While the answer (M + 1) is obvious to most people, it was a profound stumbling block for most AI models.

The release of o1-preview (Strawberry) seemed a particularly apt time to test some classic logic puzzles against modern LLMs. According to OpenAI, o1-preview is a window into a new series of reasoning models for solving hard problems — representing a huge jump in reasoning efficacy compared to 4o.

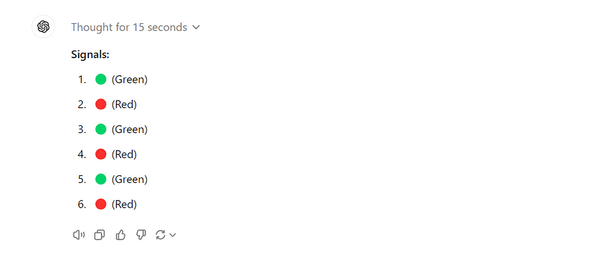

And it does seem to improve upon ChatGPT’s reasoning ability. Not only does it draw conclusions, but it tells the user how it arrived at those conclusions.

(I particularly like the phrase “Thought for a few seconds” — anchoring, a bit, the concept that the machinery is, in fact, thinking.)

When given a handful of elementary-level logic tests, it performed about the same as 4o, in a good way. Both 4o and o1-preview answered the elementary-level logic puzzles, and even the infamous Monty Hall puzzle, correctly.

But interestingly enough, it still couldn’t answer the question about Alice’s siblings… or could it?

QUESTION: Alice’s Siblings

Q: Alice has N brothers and she also has M sisters. How many sisters does Alice’s brother have? (Source: arXiv)

A: M + 1
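The expected answer is easy to check by modeling the family explicitly: a sibling's sisters are all the girls in the family except that sibling. Below is a minimal Python sketch. `build_family` and `sisters_of` are hypothetical helpers. Alice sits at index 0 and a brother at index 1, which assumes N ≥ 1.

```python
from typing import List


def build_family(n_brothers: int, m_sisters: int) -> List[str]:
    """Hypothetical helper: Alice (index 0), then her N brothers, then her M sisters."""
    return ["girl"] + ["boy"] * n_brothers + ["girl"] * m_sisters


def sisters_of(family: List[str], index: int) -> int:
    """Count the sisters of the child at `index`: every *other* child who is a girl."""
    return sum(1 for i, child in enumerate(family) if i != index and child == "girl")


if __name__ == "__main__":
    for n, m in [(1, 1), (3, 2), (4, 6)]:
        family = build_family(n, m)
        alice = sisters_of(family, 0)    # Alice's own sisters: M
        brother = sisters_of(family, 1)  # index 1 is a brother (requires N >= 1)
        print(f"N={n}, M={m}: Alice has {alice} sisters, her brother has {brother}")
```

The brother's count always comes out one higher than Alice's, because Alice herself is one of his sisters.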

RESPONSE: ChatGPT o1-preview

Alice’s brother has M sisters.

Explanation:

- Alice has M sisters, including herself.

- Her brothers share the same sisters as Alice does.

- Therefore, each of Alice’s brothers has M sisters, including Alice.

RESPONSE: ChatGPT 4o

Alice has M sisters. Since her brother has the same siblings as Alice, except for Alice herself, her brother has M sisters as well.

Thus, Alice’s brother has M sisters.

But then something interesting happened…

While I was still testing, o1 Strawberry started giving the right answers to the Alice problem. And quite consistently, too.

While it had failed the Alice problem 3 hours after launch, it was consistently solving it roughly 7 hours after launch. (Note that all testing was done in “temporary chat” mode, which does not retain information from previous conversations.)

Is it learning? Or was it just a fluke?

RESPONSE: ChatGPT o1-preview

To determine how many sisters Alice’s brother has, let’s analyze the family structure step by step:

Total Sisters in the Family:

- Alice has M sisters (excluding herself).

- Including Alice, there are M + 1 sisters in total.

Alice’s Brothers:

- Alice has N brothers.

Total Siblings in the Family:

- Total children = N brothers + M sisters + Alice = N + M + 1.

Sisters of Alice’s Brother:

- Alice’s brother has all the same siblings as Alice, except himself.

- Therefore, his sisters are all the girls in the family.

- Number of sisters = M + 1 (including Alice).

Answer: M + 1 sisters.

QUESTION: Monty Hall

Q: Suppose you’re on a game show, and you’re given the choice of three doors: Behind one door is a car; behind the others, goats. You pick a door, say №1, and the host, who knows what’s behind the doors, opens another door, say №3, which has a goat. He then says to you, “Do you want to pick door №2?” Is it to your advantage to switch your choice? (Source: Wikipedia)

A: Yes.

Monty Hall is considered a simple enough problem now, but for a while, it was quite controversial.

If I posed the Monty Hall problem to any given LLM, I would expect it to give the right answer, simply because the question has been asked so many times. So I wasn’t entirely surprised when both o1-preview and 4o answered it correctly.

RESPONSE: ChatGPT o1-preview

Yes, it is to your advantage to switch your choice. Here’s why:

Initial Choice (1/3 Chance):

- When you first pick a door, there’s a 1 in 3 chance (33.3%) that the car is behind your chosen door.

- This means there’s a 2 in 3 chance (66.7%) that the car is behind one of the other two doors.

Host’s Action:

- The host, who knows what’s behind the doors, opens another door that always has a goat.

- This action doesn’t change the initial probabilities but provides additional information.

Revised Probabilities:

- Your initial choice still has a 1/3 chance of being correct.

- The unopened door now carries the combined probability of the two doors you didn’t pick initially, which is 2/3.

Conclusion:

- By switching, you effectively choose the door with a 2/3 chance of having the car.

- Staying with your original choice keeps you at a 1/3 chance.

Therefore, switching doors doubles your probability of winning the car from 33.3% to 66.7%.

Answer: Yes; switching doors doubles your chance of winning — the odds increase from 1⁄3 to 2⁄3 if you switch.

RESPONSE: ChatGPT 4o

Yes, it is to your advantage to switch your choice. This scenario is a classic example of the Monty Hall problem. Here’s why:

- Initially, the chance of the car being behind the door you picked (door №1) is 1/3, and the chance of the car being behind one of the other two doors is 2/3.

- When the host opens a door with a goat (which he will always do, since he knows where the car is), the probability of the car being behind the other unopened door (door №2 in this case) remains 2/3.

- If you stick with your original choice (door №1), your probability of winning the car is still 1/3.

- However, if you switch to the other unopened door (door №2), your probability of winning the car increases to 2/3.

Thus, statistically, switching gives you a better chance of winning the car.
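The three-door reasoning in both responses can be checked empirically. Below is a minimal Monte Carlo sketch in Python. It assumes the standard rules: the car is placed uniformly at random, and the host always opens a goat door you did not pick. `play_classic` and `win_rate` are hypothetical names used for illustration.

```python
import random


def play_classic(switch: bool, rng: random.Random) -> bool:
    """Play one round of the three-door game; return True if the player wins the car."""
    doors = [0, 1, 2]
    car = rng.choice(doors)
    pick = rng.choice(doors)
    # The host knowingly opens a goat door that the player did not pick.
    opened = rng.choice([d for d in doors if d != pick and d != car])
    if switch:
        # With three doors, exactly one unopened door remains besides the pick.
        pick = next(d for d in doors if d != pick and d != opened)
    return pick == car


def win_rate(switch: bool, trials: int = 100_000, seed: int = 0) -> float:
    """Fraction of simulated rounds won under a fixed stay/switch strategy."""
    rng = random.Random(seed)
    wins = sum(play_classic(switch, rng) for _ in range(trials))
    return wins / trials


if __name__ == "__main__":
    print(f"stay:   {win_rate(switch=False):.3f}")  # expected to be close to 1/3
    print(f"switch: {win_rate(switch=True):.3f}")   # expected to be close to 2/3
```

The fixed seed keeps the run reproducible. With 100,000 trials, the sampling noise is well under one percentage point.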

But here’s something else that’s interesting…

Is the machine just answering with what it knows to be true of the Monty Hall problem? Or is it truly reasoning? To dig a little deeper, I decided to alter the premise. The original Monty Hall problem is counterintuitive primarily because it uses three doors in the example, so I changed that to eleven.

Q: Suppose you’re on a game show, and you’re given the choice of eleven doors: Behind one door is a car; behind the others, goats. You pick a door, say №1, and the host, who knows what’s behind the doors, opens another door, say №3, which has a goat. He then says to you, “Do you want to pick door №2?” Is it to your advantage to switch your choice? (Adapted from Wikipedia)

RESPONSE: ChatGPT o1-preview

No, in this specific scenario, it is not to your advantage to switch your choice. Here’s why:

Initial Choice (1/11 Chance):

- When you first pick a door out of 11, there’s a 1 in 11 chance (approximately 9.09%) that the car is behind your chosen door.

- There’s a 10 in 11 chance (approximately 90.91%) that the car is behind one of the other ten doors.

Host’s Action:

- The host, knowing what’s behind the doors, opens one door (door №3) that has a goat.

- He then offers you the option to switch to door №2.

Understanding the Host’s Strategy:

- Critical Difference from the Classic Monty Hall Problem:

- In the classic problem, the host always opens a goat door and offers you the chance to switch to the only remaining unopened door, effectively consolidating the probabilities.

- In this modified scenario, the host opens just one goat door but doesn’t eliminate all other unopened doors except one. There are still nine other unopened doors besides your initial choice and door №2.

Host’s Offer Doesn’t Provide Additional Information:

- Since the host could have opened any of the other doors with goats and chosen any door to offer (door №2 in this case), his action doesn’t give you meaningful information about where the car might be.

- The probability that the car is behind door №2 remains at 1/11, the same as any other unopened door.

Probabilities Remain Unchanged:

- Your initial choice (door №1) still has a 1/11 chance of having the car.

- Door №2 also has a 1/11 chance.

- The remaining unopened doors collectively hold the remaining probability.

Conclusion:

- Switching to door №2 does not increase your chances of winning; both staying and switching offer the same probability (1/11).

- Therefore, there is no statistical advantage in switching your choice in this specific scenario.

Answer: No; in this case, switching does not improve your odds — you have the same chance whether you switch or stay.

RESPONSE: ChatGPT 4o

Yes, it is to your advantage to switch your choice. This is a variation of the well-known Monty Hall problem, where switching doors increases your chances of winning.

Here’s how it works:

- Initial Probability: When you first pick a door (say Door 1), the chance that the car is behind that door is 1 out of 11 (since there are 11 doors), or about 9.09%. The chance that the car is behind one of the other 10 doors is 10 out of 11, or about 90.91%.

- The Host’s Action: The host knows where the car is, and he opens a door that has a goat. This action doesn’t change the fact that you had only a 1/11 chance of picking the car initially, and a 10/11 chance that the car is behind one of the other doors.

- After the Host Opens a Door: When the host opens a door (say Door 3) with a goat, it provides you with additional information. If the car were behind one of the other doors, excluding Door 3, the host’s action effectively narrows the possibilities to the remaining unopened doors.

- Switching Strategy: Since there was a much higher chance (10/11) that the car was behind one of the other doors initially, switching increases your odds. After the host opens a door with a goat, you’re essentially redistributing that 10/11 probability among the remaining unopened doors, which makes switching statistically more favorable.

In short, your initial choice had a very low probability of being correct, and by switching, you take advantage of the host’s knowledge and increase your odds of winning from 1/11 to about 10/11. So, yes, you should switch.
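The eleven-door question does not say how the host picks which goat door to open or which door to offer. That gap is where the two responses diverge. Below is a minimal sketch that computes exact conditional probabilities with Python's `fractions` module, under three assumptions of ours that the puzzle does not state:

- The car is placed uniformly at random.
- The host opens one goat door, chosen uniformly from the doors you did not pick.
- The door he offers (№2) is chosen without regard to where the car is.

Under the third assumption the offer itself carries no information. `posterior_given_opened` is a hypothetical helper.

```python
from fractions import Fraction
from typing import Dict


def posterior_given_opened(n_doors: int, pick: int, opened: int) -> Dict[int, Fraction]:
    """P(car behind each door | host opened `opened`), by exact enumeration.

    Assumes a uniformly placed car and a host who opens one goat door chosen
    uniformly from the doors the player did not pick.
    """
    doors = range(1, n_doors + 1)
    joint = {}
    for car in doors:
        prior = Fraction(1, n_doors)
        # Doors the host may open: not the player's pick and not the car.
        allowed = [d for d in doors if d != pick and d != car]
        p_open = Fraction(1, len(allowed)) if opened in allowed else Fraction(0)
        joint[car] = prior * p_open
    total = sum(joint.values())
    return {door: p / total for door, p in joint.items()}


if __name__ == "__main__":
    post = posterior_given_opened(11, pick=1, opened=3)
    print("stay on door 1:  ", post[1])  # → 1/11
    print("switch to door 2:", post[2])  # → 10/99
```

Under these assumptions, staying wins with probability 1/11 (about 9.1%) and switching to door №2 wins with probability 10/99 (about 10.1%). That is a small edge for switching, which matches neither o1-preview's “same chance” nor 4o's “about 10/11”. Different assumptions about the host's behavior would give different numbers. Calling the same helper with three doors reproduces the classic 1/3 versus 2/3.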

But the fun doesn’t stop there…

I’ll leave the rest out: I asked o1-preview and 4o a battery of elementary logic puzzles and, interestingly, both of them got the answers to the other questions correct.

But o1-preview did have a distinct advantage — it revealed its “thought” processes.

And the ability to see those thought processes gave me an idea: how does ChatGPT o1-preview understand the concept of lying?